I.

The study is called “A Randomized Controlled Trial of Interleaved Mathematics Practice” and that’s exactly what it is. It’s one of the most readable studies I’ve read in a long time — the writing is crisp and there is a minimum of technical concepts. Not all papers do a great job bringing up potential concerns or counterarguments, but this one definitely does.

The short version of the study is that interleaving practice — basically, every problem is of a different type than its neighbor — was very effective at helping kids do well on a test. This is a trendy thing in teaching, but probably for a good reason. First (and most importantly) it seems to work. Second (and still importantly) it’s entirely uncontroversial; nobody seems particularly committed to the status quo of blocked (i.e. repetitive) practice. Third, it seems pretty easy to pull off.

Of the many things I loved about this paper, I especially loved the very clear definition of interleaved practice and why it might work. Here’s what they say:

- Interleaved practice is necessarily practice in choosing a strategy, not just practice in executing that strategy.

- It’s also necessarily spaced practice: rather than practicing the same skill three times on Monday, it’s better to spread that practice out over three separate days.

- It’s also necessarily retrieval practice, which gets tossed around a lot but is used clearly by the authors to refer to practice recalling stuff from memory rather than getting the info some other way (like checking your work on the previous problem).
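To make that definition concrete for myself, here’s a minimal Python sketch (my own illustration, not anything from the paper) that turns a blocked set of problems into an interleaved one by cycling through the problem types. The problem-type labels are made up.

```python
from collections import deque


def interleave(blocks: dict[str, list[str]]) -> list[str]:
    """Reorder a blocked set of problems by cycling through the problem types.

    `blocks` maps a problem type to its problems in blocked order. Neighbors
    differ as long as every type still has problems left; if one type has more
    problems than the others, its extras end up back to back at the end.
    """
    queues = [deque(problems) for problems in blocks.values()]
    order = []
    while any(queues):  # keep going until every type's queue is empty
        for queue in queues:
            if queue:
                order.append(queue.popleft())
    return order


# Hypothetical blocked assignment: three problems of each type, grouped by type.
blocked = {
    "slope": ["slope 1", "slope 2", "slope 3"],
    "inequalities": ["ineq 1", "ineq 2", "ineq 3"],
    "circle area": ["area 1", "area 2", "area 3"],
}
print(interleave(blocked))
# ['slope 1', 'ineq 1', 'area 1', 'slope 2', 'ineq 2', 'area 2', 'slope 3', 'ineq 3', 'area 3']
```

The labels are only there to show the ordering. On a real worksheet the problems shouldn’t announce their type, since figuring out which strategy to use is the whole point.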

They also are not nutty; they get that blocked practice has its role. “Interleaved practice might be less effective or too difficult if students do not first receive at least a small amount of blocked practice when they encounter a new skill or concept,” they write. That seems correct.

(This is where the authors slot in the literature on replacing practice with the analysis of examples or mistakes: alternating between examples and practice is for that initial encounter, when a certain amount of focus on the same thing is absolutely necessary. The research on worked examples and the research on interleaving are sometimes seen as in tension, but I think this is probably the neatest way to resolve it.)

One question I still have is what this all looks like over the course of a unit, or over several weeks. The study required teachers to use nine worksheets over four months, so that’s about two days a month mainly devoted to review. That seems doable. Except that I’m also the sort of teacher who uses a lot of low-stakes quizzes. How does that fit into the interleaved practice scheme? Should I count an interleaved quiz as interleaved practice? Or maybe not, because a quiz doesn’t give kids a chance to get help with problems they don’t remember how to solve?

I’m left with questions about how often to hold a mixed review day, if I want to do things the way they did in the study. But I think once every two weeks is sensible and doable, and I’m going to try to actually do that this school year.

II.

I’m reading this paper for kicks, mostly, but also with an eye on my own teaching. I’ve known about the supposed benefits of interleaved practice for a long time but haven’t been entirely successful at pulling it off in my classroom. I teach four different courses and have limited bandwidth for making my own materials. I agree with the authors of this paper: “The greatest barrier to the classroom implementation of interleaved mathematics practice is the relative scarcity of interleaved assignments in most textbooks and workbooks.”

I promise I’ll get to the paper in a second, but first a quick note about the sentences that follow the one I just quoted. They continue: “There are some remedies, however. For instance, teachers can create interleaved assignments by simply choosing one problem from each of a dozen assignments from their students’ textbook. Teachers might also search the Internet for worksheets providing ‘mixed review’ or ‘spiral review,’ and they can use practice tests created by organizations that create high-stakes mathematics tests.”

It’s entirely typical for research never to get around to studying those remedies in any systematic way, but shouldn’t it? The work of translating something like interleaved practice into something workable in the classroom requires a lot of creativity. I know that there doesn’t seem to be anything interesting about that first suggestion (“choosing one problem from each of a dozen assignments from their students’ textbook”), but I find myself with questions: would these teachers be rewriting the problems? how do they choose the skills? do they do any blocked practice, and do they have a way of keeping track of which problems they’ve already used? are there clever ways of reducing the workload?
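Here’s how I imagine the bookkeeping behind that first suggestion could work, as a rough Python sketch. Everything in it is hypothetical: the `build_mixed_review` function, the assignment names, and the problem ids are mine, and it assumes each past assignment is just a list of problem ids. It picks one unused problem from each assignment and remembers what it has already used, which answers at least the tracking question, if not the others.

```python
import random


def build_mixed_review(assignments, used, seed=None):
    """Pick one not-yet-used problem from each past assignment, in shuffled order.

    assignments: dict mapping an assignment name to a list of problem ids
    used: set of problem ids already placed on an earlier review; it is updated
          in place so the next review avoids repeats
    """
    rng = random.Random(seed)
    worksheet = []
    for problems in assignments.values():
        fresh = [p for p in problems if p not in used]
        if not fresh:
            continue  # every problem from this assignment has been used already
        pick = rng.choice(fresh)
        used.add(pick)
        worksheet.append(pick)
    rng.shuffle(worksheet)  # avoid presenting the skills in textbook order
    return worksheet


# Hypothetical textbook sections and problem numbers.
assignments = {
    "3.1": ["3.1 #4", "3.1 #7", "3.1 #12"],
    "3.2": ["3.2 #2", "3.2 #9"],
    "4.1": ["4.1 #5", "4.1 #11"],
}
used = set()
review_1 = build_mixed_review(assignments, used, seed=1)
review_2 = build_mixed_review(assignments, used, seed=2)  # shares no problems with review_1
print(review_1, review_2)
```

Rewriting problems, choosing which skills count, and deciding how much blocked practice comes first are still judgment calls that no script makes for you.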

I get it, that’s just not what this study is. I have absolutely no issue with this study (which impressed me). But wouldn’t it be nice to read something as systematic and careful as this about the remedies that make translating this research feasible?

III.

The goal of this study was to test the feasibility of interleaved practice in realistic conditions. So, unlike a lot of research on practice, this was happening in classrooms. Actually, a lot of classrooms: 54 of them, all 7th Grade.

This study was preregistered, and you can tell, because the authors have a rationale for every single decision they made. It’s refreshing and entirely clear.

You’d think this would make the paper a tedious read, but quite the opposite: it felt like listening to actual humans explain their actual thinking. Honestly, I found it sort of gripping. I loved all the little touches.

They used statistical software in advance of the study to figure out how many classes they needed in order to trust their results. They recruited schools, paying each one $1000 to participate in the study (I wonder where that $1000 went), and then they had to recruit teachers. Each of the teachers got tossed $1000 also, and honestly that feels like a sweet deal. I would very much like to be paid $1000 for some researchers to write worksheets for me. They seemed to have no trouble finding 7th Grade teachers who wanted in on the study.

And now, for my favorite detail from the study:

“We recruited teachers who taught a seventh-grade math course described by the school district as Honors Advanced Grade 7 Mathematics.”

WOAH, huge red flag here. So this whole study was just with the top of the top of math students in the district?! I can’t believe that they would do this…

Oh, wait.

“Although its title suggests that the course is selective, it is the modal course for seventh-grade students at most of the schools in the district.”

So, this is hilarious. Some 7th Graders take Algebra, and those who failed the Florida assessment are in a different course. But the totally normal, typical 7th Grade math course in this district is titled Quantum Honors 7th Grade Category Theory for Gifted Youngsters. Florida sounds amazing.

They recruited only teachers with multiple sections of 7th Grade math. The researchers did the obvious thing and randomly split each teacher’s sections between the blocked practice condition and the interleaved practice condition. (If you’re asking yourself how the researchers handled teachers with an odd number of sections, this is the paper for you.)
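Randomizing within each teacher is simple enough to sketch. To be clear, I don’t know the rule the paper used for odd numbers of sections; this hypothetical Python version just flips a coin for the leftover section. Teacher and section names are made up.

```python
import random


def assign_sections(sections_by_teacher, seed=None):
    """Randomly split each teacher's sections between the two practice conditions.

    Returns a dict mapping each section to "interleaved" or "blocked". An even
    number of sections splits evenly; with an odd number, the leftover section's
    condition is decided by a coin flip. That odd-number rule is a placeholder
    assumption, not the rule the study actually used.
    """
    rng = random.Random(seed)
    plan = {}
    for sections in sections_by_teacher.values():
        conditions = ["interleaved", "blocked"] * (len(sections) // 2)
        if len(sections) % 2:
            conditions.append(rng.choice(["interleaved", "blocked"]))  # leftover section
        rng.shuffle(conditions)  # randomization happens within each teacher
        plan.update(zip(sections, conditions))
    return plan


plan = assign_sections({"Teacher A": ["A1", "A2"], "Teacher B": ["B1", "B2", "B3"]}, seed=7)
print(plan)
```

Randomizing within teacher means every teacher with two or more sections contributes to both conditions, so one unusually strong teacher can’t tip the comparison by landing mostly in one group.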

The worksheets seemed a nice size: 8 problems each. But then again they would be, because the authors did a pilot study with two experienced classroom teachers they have long-term relationships with. (This paper really is charming the pants off of me.)

They did thoughtful things that should reassure skeptical readers. My favorite: both conditions had the exact same final worksheet before the exam. That way, neither group could be said to have gone a long time without seeing the skills. (Otherwise, because of the nature of blocking, students in the blocked condition would have gone a couple of months without seeing practice problems for the first skill. This setup also closely resembles the usual habit of doing a mixed review right before an exam, though the 33-day gap between that review and the test would be unusual in pretty much any class.)

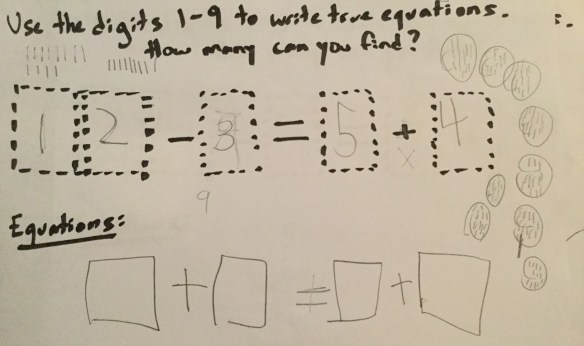

Here’s the figure explaining what they did:

(Notice how the interleaved practice worksheets have a bunch of filler skills that are mostly blocked? And the blocked practice condition has a bunch of filler worksheets that are mostly interleaved? And that only the colored “core” skills were assessed on the test? That’s because the researchers didn’t tell the teachers what the experiment was about and wanted to make sure they couldn’t figure it out on their own. Clever!)

I complained above about wanting to know the practical details of designing these worksheets, but honestly it’s not that big a deal. It’s true, I’d rather not spend my planning time making new worksheets…but, yeah, I’m frequently making new worksheets during my planning time anyway. The bigger question for me is how to keep track of all the skills in a course, which I find logistically sort of complicated in the heat of the school year.

Given the particulars of my teaching situation, maybe the best thing to do would be to formally schedule a practice day every two weeks, and a quiz on the other weeks? Maybe on Fridays, which are breakneck and hectic for me?
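If I do go the Friday route, the calendar part at least is easy to generate. Here’s a small Python sketch, assuming a made-up school year and ignoring holidays and testing weeks, which a real calendar would need to skip.

```python
from datetime import date, timedelta


def friday_plan(first_day: date, last_day: date):
    """List every Friday from first_day to last_day, alternating mixed review and quiz."""
    days_until_friday = (4 - first_day.weekday()) % 7  # weekday(): Monday=0 ... Friday=4
    friday = first_day + timedelta(days=days_until_friday)
    plan = []
    week = 0
    while friday <= last_day:
        plan.append((friday, "mixed review" if week % 2 == 0 else "quiz"))
        friday += timedelta(weeks=1)
        week += 1
    return plan


# Hypothetical start and end dates.
for day, activity in friday_plan(date(2024, 8, 12), date(2024, 10, 4)):
    print(day.isoformat(), activity)
```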

IV.

Whenever I read a paper, I feel like I owe it to myself to try to understand the trickier statistical points. This is inevitably embarrassing, because I don’t understand these things well yet. Oh well.

First up:

“In order to determine the necessary number of participating classes, we conducted a priori power analyses with Optimal Design software. Each analysis assumed a two-tailed test with an alpha level of .05 and a two-level, random effects model for a continuous outcome variable. We ran numerous analyses with varying values of effect size and intraclass correlation, all of which were more conservative than the values obtained in the pilot study. In every scenario, power exceeded .95 with 30 classes (15 per condition).”

There are a lot of concepts here and I’m barely fluent in statistics-ese. I will try to translate the above paragraph into plain English-ese:

If we had only used 10 classes, this study would have been underpowered. In other words, it might not have been large enough to detect the effect of the intervention, even if interleaved practice really does help. So we used software to help us decide how many classes needed to be part of this study.

What went into that calculation? First, we put in the standard “alpha,” which is our threshold for how unlikely a result would have to be, if interleaving made no difference at all, before we’d call it real. Put another way, it’s the false-positive rate we’re willing to accept. As is (for better or worse) standard in many studies, that’s 0.05. (I don’t entirely get this yet, but power calculations are done in reference to the acceptable alpha.)
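The idea of alpha clicked for me a bit more when I pictured running the same study many times with an intervention that does nothing at all. Here’s a minimal Python simulation of that (my own toy, not the paper’s analysis): two groups drawn from the same distribution, compared with a simple two-sided z-test that assumes the spread of scores is known. With alpha at .05, roughly 5% of these no-effect studies still come out “significant.”

```python
import random
from statistics import NormalDist, fmean


def false_positive_rate(n_per_group=20, trials=10_000, alpha=0.05, seed=0):
    """Simulate studies where the intervention truly does nothing and return the
    share that a two-sided z-test still calls significant at level alpha."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 when alpha = .05
    se = (2 / n_per_group) ** 0.5  # SD of a difference in means when each score has SD 1
    hits = 0
    for _ in range(trials):
        group_a = fmean(rng.gauss(0, 1) for _ in range(n_per_group))
        group_b = fmean(rng.gauss(0, 1) for _ in range(n_per_group))
        if abs(group_a - group_b) / se > z_crit:
            hits += 1
    return hits / trials


print(false_positive_rate())
```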

We also had to consider the fact that even though our results are framed in terms of the test results of individual students, the intervention takes place at the classroom level. Since students are grouped in classrooms, we can’t treat each additional student as an independent source of information. What protects us against error is having lots of independent observations, so that random ups and downs cancel out. If students’ performances are correlated because they share a teacher and a classroom, each extra student adds less new information than a truly independent one would, and we run a bigger risk of mistaking a quirk of one classroom for a real effect.
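The usual way to put a number on this (a standard textbook formula, not something taken from the paper) is the design effect. Write $\tau^2$ for the variance between classes, $\sigma^2$ for the variance among students within a class, $\rho$ for the intraclass correlation, $m$ for the number of students per class, and $n$ for the total number of students, with total variance scaled to 1:

```latex
\[
\rho = \frac{\tau^2}{\tau^2 + \sigma^2}, \qquad \tau^2 + \sigma^2 = 1
\]
\[
\operatorname{Var}(\bar{y}_{\text{class}}) = \tau^2 + \frac{\sigma^2}{m} = \rho + \frac{1 - \rho}{m}
\]
\[
\text{DE} = \frac{\rho + (1 - \rho)/m}{1/m} = 1 + (m - 1)\rho,
\qquad n_{\text{eff}} = \frac{n}{1 + (m - 1)\rho}
\]
```

For example, with hypothetical values of 25 students per class and $\rho = 0.2$, the design effect is $1 + 24 \times 0.2 = 5.8$, so 1,000 students carry about as much information as $1000 / 5.8 \approx 172$ independent students would.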

So we told the software how correlated we thought the results of students in the same classroom would be (the “intraclass correlation”). With that info we played around with the software, trying lots of combinations of effect size and intraclass correlation, all more pessimistic than what we saw in our pilot study. In every scenario, 30 classes (15 per condition) would give us better than a 95% chance of detecting a real effect.
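To see how these pieces interact, here’s a rough power calculator in Python. It’s a normal approximation I’m using for illustration; it is not Optimal Design, which as I understand it works with the t distribution and would report somewhat lower power when there are few classes. The effect size and intraclass correlation in the usage lines are made-up values, not the paper’s.

```python
from statistics import NormalDist


def cluster_power(effect_size, icc, students_per_class, classes_per_arm, alpha=0.05):
    """Approximate power for comparing two conditions randomized by class.

    effect_size: difference between conditions, in student-level SD units
    icc: intraclass correlation (share of score variance lying between classes)
    Uses a normal approximation; tools built on the t distribution will give
    somewhat lower power when there are only a few classes per condition.
    """
    std_normal = NormalDist()
    # Variance of one class's average score, with total variance scaled to 1.
    var_class_mean = icc + (1 - icc) / students_per_class
    # Standard error of the difference between the two conditions' averages.
    se_diff = (2 * var_class_mean / classes_per_arm) ** 0.5
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    # Ignores the negligible chance of a "significant" result in the wrong direction.
    return std_normal.cdf(effect_size / se_diff - z_crit)


# Made-up inputs: effect of 0.4 SD, ICC of 0.2, 25 students per class.
for classes_per_arm in (5, 10, 15, 25):
    power = cluster_power(0.4, 0.2, 25, classes_per_arm)
    print(f"{classes_per_arm} classes per condition: power ≈ {power:.2f}")
```

Notice that adding students to each class only shrinks the `(1 - icc) / students_per_class` term, never the `icc` term, so past a point more students per class barely helps. Adding classes is what really moves power in a design like this.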

(I found this example useful for helping me understand statistical power more clearly.)

Next up:

“Because of the cluster design, we further examined test scores by fitting a two-level model (students within classes) with HLM Version 7.03. Using restricted maximum likelihood (REML) we first estimated a fully unconditional model to evaluate the variability in students’ scores within and between classes. To assess the difference between conditions, we used REML to estimate a two-level random-intercept model. Tests of the distributional assumptions about the errors at each level of the model (normality and equal variance) did not reveal any violations.”

My attempt:

The way we’re thinking about the results here is that there is an effect from interleaved practice, but it’s an effect on the classroom. Then, within each classroom, there is random variation from student to student.

Groups are funny things, statistically speaking. Sometimes one group can outperform the other but there is a tremendous amount of variation within the group. Suppose that we only looked at the classrooms to decide that interleaved practice was more effective — wouldn’t it still be possible that a lot of the students in the interleaved classroom did worse? And wouldn’t we want to know that?

Put it the other way, though: suppose we only looked at the students who received the interleaved treatment as one big group, ignoring the classes they came from. If they outperformed the blocked practice group, shouldn’t we worry that maybe this came from just one of the classes? Maybe a bunch of the classes couldn’t handle the interleaved condition at all, but there was one teacher who pulled it all together?

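So we fit two models. First, a “fully unconditional” model with no predictors, which splits the variation in test scores into two pieces: how much students differ from their classmates, and how much class averages differ from one another. Then, a random-intercept model, which lets each class have its own baseline and asks whether the condition (interleaved or blocked) explains the differences between classes, over and above the ordinary class-to-class variation.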
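To get a feel for that first, “fully unconditional” step, here’s a Python sketch of the classic one-way ANOVA estimate of the intraclass correlation. This is a swapped-in technique, not what the authors did: they used REML in HLM. My understanding is that for equal-size classes the two agree whenever the ANOVA estimate of between-class variance comes out positive, but treat that as my reading rather than gospel. The scores are made up.

```python
from statistics import fmean


def icc_anova(classes):
    """One-way ANOVA (method-of-moments) estimate of the intraclass correlation.

    classes: list of lists, each inner list holding one class's test scores.
    Assumes every class has the same number of students. This is NOT the REML
    estimate the paper used; it is the simpler textbook version.
    """
    k, m = len(classes), len(classes[0])
    if any(len(c) != m for c in classes):
        raise ValueError("this sketch assumes equal-size classes")
    grand_mean = fmean(score for c in classes for score in c)
    class_means = [fmean(c) for c in classes]
    ss_between = m * sum((cm - grand_mean) ** 2 for cm in class_means)
    ss_within = sum((s - cm) ** 2 for c, cm in zip(classes, class_means) for s in c)
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (k * (m - 1))  # estimate of within-class variance
    between_var = max((ms_between - ms_within) / m, 0.0)  # truncated at zero
    return between_var / (between_var + ms_within)


scores_by_class = [[72, 80, 75, 69], [88, 91, 85, 90], [60, 66, 71, 63]]  # made up
print(round(icc_anova(scores_by_class), 2))
```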

We used a magic formula called “REML” (restricted maximum likelihood) to fit both models.

I tried, really, I did. One last effort:

“The level-2 class model included a dummy variable for condition and 14 dummy variables for teacher effects. Before examining the main effect of condition, we evaluated the potential interaction between teacher and condition and found not statistically significant interaction effects, p > .05. We then tested a main effects model that evaluated the effect of condition, controlling for teacher effects, and we found a significant effect of interleaving (p < .001).”

OK, I can’t do this one. Does this just mean that they checked to make sure there was no significant relationship between who taught the classes and the test scores? So that the interleaved practice results aren’t the result of perhaps a few stronger teachers ending up with more of the interleaved classes randomly?
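Here’s my best guess at the idea, as a sketch rather than an account of the authors’ model. Because every teacher taught sections in both conditions, you can compare each teacher’s interleaved sections with that same teacher’s blocked sections. The interaction check asks whether those gaps differ from teacher to teacher more than chance would explain; the main-effects model then estimates the interleaving effect with each teacher’s overall level held fixed, which for a balanced design roughly amounts to averaging the per-teacher gaps. The Python below only computes the gaps from made-up class averages; it doesn’t run the significance tests or the two-level model.

```python
from statistics import fmean


def within_teacher_gaps(class_averages):
    """Interleaved-minus-blocked gap in class-average score, for each teacher.

    class_averages maps a teacher to {"interleaved": [...], "blocked": [...]},
    where each list holds the average test score of one of that teacher's sections.
    """
    return {
        teacher: fmean(by_condition["interleaved"]) - fmean(by_condition["blocked"])
        for teacher, by_condition in class_averages.items()
    }


# Made-up class averages (percent correct) for three hypothetical teachers.
data = {
    "Teacher A": {"interleaved": [74, 70], "blocked": [45, 50]},
    "Teacher B": {"interleaved": [66], "blocked": [41]},
    "Teacher C": {"interleaved": [81, 77], "blocked": [58, 60]},
}
gaps = within_teacher_gaps(data)
print(gaps)                  # similar gaps across teachers: the interaction question
print(fmean(gaps.values()))  # roughly the "main effect of condition"
```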

V.

I was recently hanging out with some teacher friends, and they playfully told me that I was a bit of an academic troll toward Jo Boaler. But I’ve hidden this criticism at the bottom of the post, so hopefully you’ll forgive me for using this paper to make another little critique.

I had raised some questions about this paper of Boaler’s, titled “Changing Students Minds and Achievement in Mathematics: The Impact of a Free Online Student Course.” The paper reports an experiment, but it has a lot of things that made me nervous. Perhaps most mysterious is a very fishy table of how many students ended up in each group.

Why did 200 more students end up in the control group than in the treatment group? Why doesn’t the paper mention any of that, not even with a tossed-off explanation? One possibility, though not the only one, is that there was significant attrition from the treatment group because the treatment was difficult to carry out. That could affect the reported results. What if it was the most difficult-to-manage classes that dropped out of the treatment, while those teachers’ other classes stayed in the control group?
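To see why this kind of attrition matters, here’s a toy Python simulation with made-up numbers. It says nothing about what actually happened in Boaler’s study; it only shows the mechanism. The “treatment” has no effect at all, but the lowest-scoring treatment classes drop out while every control class stays.

```python
import random
from statistics import fmean


def attrition_gap(n_classes=200, dropout_share=0.3, seed=0):
    """Toy simulation: a treatment with zero effect plus one-sided dropout.

    Returns (gap before dropout, gap after dropout), where each gap is the
    treatment group's average class score minus the control group's.
    """
    rng = random.Random(seed)
    # Every class score is pure noise; the treatment changes nothing.
    scores = [rng.gauss(70, 10) for _ in range(n_classes)]
    # The scores are independent draws, so splitting the list in half
    # works like random assignment.
    treatment, control = scores[: n_classes // 2], scores[n_classes // 2 :]
    gap_before = fmean(treatment) - fmean(control)
    # The lowest-scoring treatment classes (standing in for "hardest to manage")
    # drop out; every control class stays.
    n_drop = int(len(treatment) * dropout_share)
    survivors = sorted(treatment)[n_drop:]
    gap_after = fmean(survivors) - fmean(control)
    return gap_before, gap_after


print(attrition_gap())
```

Even with zero true effect, the surviving treatment classes end up looking better than the control classes, purely because of who left.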

I don’t want to go in depth on Boaler’s paper, which is about something else entirely. But I think anyone interested in reading research could have some fun reading this interleaved practice experiment side-by-side with Boaler’s piece, because they make for a really rich compare/contrast pair.

They’re both experiments, but one is carefully, carefully constructed, and it’s convincing because its authors bring up potential issues and explain every decision they made. The other paper contains lacunae that are never explained.

Research is ideally designed with the skeptic in mind. You’re supposed to be able to read research skeptically. The whole point of research is to be able to withstand that skepticism and leave with your thinking changed, in some way. This is partly a matter of design, but it’s largely a matter of the writing itself, which should be clear, generous to the reader, and eager to raise concerns.

So: not all experiments are created equal, and not all papers are either. This interleaved practice study is a good one on both counts.